The Story They Don’t Want Told

On January 15, 2025, Rocky Mountain Health Plans (RMHP) crossed a line no insurer is legally or ethically permitted to cross. Acting under the corporate umbrella of UnitedHealthcare of Colorado (UHC-CO) and UnitedHealth Group (UHG), RMHP took five recorded patient calls—concerning denied coverage for gender-affirming care—and packaged them with protected health information (PHI): surgical history, psychiatric medications, and identity details.

They sent it to the Grand Junction Police Department (GJPD).

But what most don’t know: that was the second attempt. Internal routing metadata and correspondence indicate that UHC, operating at the corporate level, first sought to refer the same PHI to the Department of Homeland Security (DHS). When that federal referral failed to produce action, RMHP transmitted the same material locally to police.

Two bites at the apple—both without warrant, subpoena, or emergency justification.

And the question that matters most: why did they do this?

Why: Retaliation Disguised as “Safety”

The answer isn’t hidden in statute books. It’s political, personal, and strategic.

Silencing Advocacy. The patient—a trans woman—was documenting coverage denials, building a digital archive, and preparing litigation. AdministrativeErasure.org was already exposing UHC’s practices. Escalating her to DHS and police wasn’t about “safety.” It was about silencing speech and making advocacy carry the cost of fear.

Shifting Liability. At the time, both UHC and RMHP faced exposure over gender-care denials and potential HIPAA non-compliance. By flipping the script, they reframed the patient not as a whistleblower but as a threat—diverting scrutiny from their own misconduct.

Profiling a Vulnerable Target. Trans patients are disproportionately surveilled and stigmatized. RMHP exploited that bias, betting that law enforcement and media would default to suspicion rather than empathy.

This was not an accident. It was administrative erasure: weaponizing bureaucratic tools to erase dissent and credibility.

“Deny. Defend. Depose.”

Just days after Luigi Mangione allegedly shot and killed UnitedHealthcare CEO Brian Thompson, the patient used three words to describe what RMHP and UHC were already doing to her:

“Deny. Defend. Depose.”

Deny needed care—estrogen, surgery, coverage.

Defend the denial with corporate compliance maneuvers.

Depose the whistleblower by framing her as a threat and dragging her into court.

Those words weren’t rhetorical flourish; they predicted exactly what came next: escalation to DHS, then police, then litigation. They captured the corporate playbook—deny the claim, defend the denial, and depose the patient into silence.

Now preserved in the civil complaint, the phrase crystallizes UHC’s and RMHP’s retaliatory posture. It is the blueprint by which insurers turn patients into defendants, and defendants into erasures.

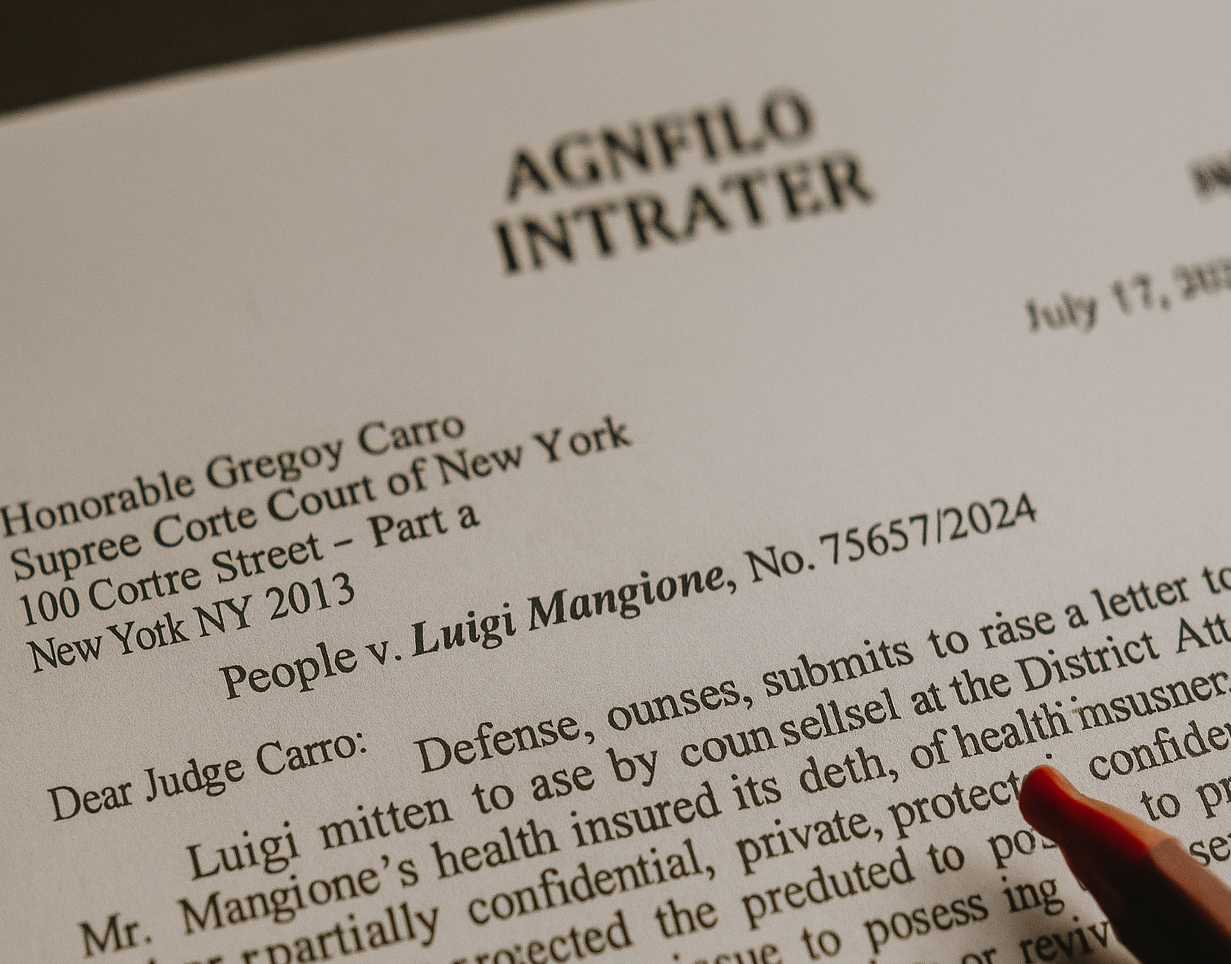

Exhibit L: The Paper Trail

The referral is memorialized in Exhibit L, a January 15, 2025 cover letter from RMHP to the Grand Junction Police Department. It lists:

Five audio recordings of patient calls;

Identifying PHI, including surgical and medication details;

Language portraying the patient as a potential threat.

What it does not include:

A warrant;

A subpoena;

Any reference to imminent danger.

Exhibit L reads not as a compliance response but as RMHP’s calculated attempt to shift reputational risk from the insurer to law enforcement.

During follow-up communications, an RMHP employee reportedly acknowledged the impropriety, saying:

“I’m not supposed to do that — but it’s done.”

That single line captures the state of mind behind the disclosure: awareness of violation, followed by willful completion. It turns a policy failure into a conscious act.

The 35-Day “Myth” of Imminent Threat

For a threat-based disclosure of this kind, HIPAA permits releasing PHI without consent under 45 C.F.R. § 164.512(j)(1)(i) only when there is a good-faith belief that disclosure is necessary to prevent or lessen a serious and imminent threat.

But RMHP’s own records destroy any “imminent” claim.

The last call was in December 2024.

RMHP waited 35 days before sending those calls to police.

Police closed the referral within 72 hours, filing no charges.

As detailed in Section XVI, “The 35-Day ‘Myth’ of Imminent Threat,” no threat is imminent after five weeks of silence. The delay itself proves the defense hollow.

From Algorithm to Accusation

This wasn’t the work of one rogue employee. UnitedHealth’s ecosystem runs on AI-driven monitoring platforms—Verint, NICE, and nH Predict—that flag patient calls for “risk” markers and auto-escalate them.

A distressed patient calling about estrogen-coverage denials was flagged not as vulnerable, but as dangerous. The escalation pipeline—UHC’s algorithms feeding RMHP’s local actions—was likely triggered by algorithmic misclassification.

Automation gave UHC cover: “the system flagged it.”

But what the system really did was criminalize distress.

Media Echo: How Retaliation Travels

The police dropped the referral in three days. But the damage didn’t end there.

In July 2025, a local news article resurfaced the incident. By sequencing events and omitting context, it presented the patient as “investigated for threats.” The investigation’s closure—the fact that no charges were ever filed—was buried.

This media echo effect amplified stigma while shielding RMHP and UHC. Neither insurer needed to speak publicly; the narrative they seeded had already taken on a life of its own.

Why This Matters Beyond One Case

Civil Rights. If insurers can send patients to DHS for coverage complaints, the chilling effect is obvious.

HIPAA Integrity. The 35-day delay and 72-hour dismissal expose how weak the “imminent threat” safeguard truly is.

Trans Profiling. Distress over denied care was repackaged as public danger, reinforcing toxic stereotypes.

Policy Urgency. Current law doesn’t prevent serial escalations—DHS first, local next—until some agency takes action.

This is not merely a violation. It is a blueprint.

Administrative Erasure in Action

The Administrative Erasure project defines this pattern: using bureaucratic processes to erase dissenters by reframing them as risks.

Escalation Ladder. UHC tried federal; RMHP tried local—until someone might act.

Narrative Laundering. They didn’t need to prove a crime; they only needed to seed suspicion.

Public Stigma. Once law enforcement was involved, even briefly, media could echo the narrative without liability.

What began as coverage disputes over estrogen and surgery became a manufactured threat investigation. That’s erasure, not error.

The Human Cost

This isn’t only about statutes and exhibits. It’s about a trans woman whose identity and medical history were sent to DHS and local police without justification.

It’s about waking up to learn your calls for care were reframed as “threats.”

It’s about carrying the stigma of an “investigation” even after it’s dismissed.

It’s about knowing your insurer can erase your credibility with one email.

That is the cost of proxy surveillance: fear becomes currency, reputation the collateral, dignity the loss.

Read the Full Report

The complete 23-page analysis, with exhibits and footnotes, is available here:

👉 Surveillance by Proxy: How UnitedHealthcare Evaded HIPAA Using Local Law Enforcement (PDF)
